Generative Creativity - lecture 8:

Markov models

Introduction

Generative outputs from Cope's EMI system are modulated by models

(partly) derived from examples.

But how are the models obtained?

This lecture will look at the method of Markov modeling/chaining,

which plays a key role in a variety of sample-driven GC approaches

(including Cope).

In its simplest form, the method offers a way of building a

probabilistic model of a sequence.

N-grams

An n-gram is a contiguous string of n elements taken from a

sequence.

The sequence may be a sentence, a melody or a linguistic corpus.

For example, from the sentence `give me a break' we can extract various

n-grams.

- 1-grams (`unigrams'): `give', `a'

- 2-grams (`bigrams'): `me a', `a break'

- 3-grams (`trigrams'): `give me a', `me a break'
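The extraction above can be sketched in a few lines of Python. This is a minimal illustration rather than part of the original lecture; the function name `ngrams` is an assumption made for the example.

```python
def ngrams(sequence, n):
    """Return every contiguous run of n elements from the sequence, in order."""
    return [tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1)]

words = "give me a break".split()
for n in (1, 2, 3):
    # Join each n-gram back into a string for display.
    print(n, [" ".join(gram) for gram in ngrams(words, n)])
```

A sequence of length L contains L - n + 1 n-grams, so the four-word sentence yields four unigrams, three bigrams and two trigrams.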

Calculating n-gram probabilities

One way to obtain the n-gram probabilities is to identify each

individual n-gram and then compute its relative frequency in the

sequence.

But this is exponentially costly: the number of possible n-grams grows exponentially with n.

Instead, we can use the chain rule to build up the probabilities

incrementally.

- p(w1,w2) = p(w1) p(w2|w1)

- p(w1,w2,w3) = p(w1) p(w2|w1) p(w3|w2,w1)

- ...

- p(w1,w2,w3 ... wN) = p(w1) p(w2|w1) p(w3|w2,w1) ... p(wN|wN-1,wN-2 ... w1)
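As a worked illustration (not part of the original slides), applying the chain rule to the first three words of the example sentence `give me a break' factorises their joint probability as follows:

```latex
p(\text{give}, \text{me}, \text{a})
  = p(\text{give}) \; p(\text{me} \mid \text{give}) \; p(\text{a} \mid \text{give}, \text{me})
```

Each factor conditions one word on all of the words that precede it, so extending the n-gram by one element only requires one additional conditional probability.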

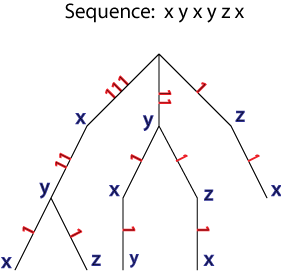

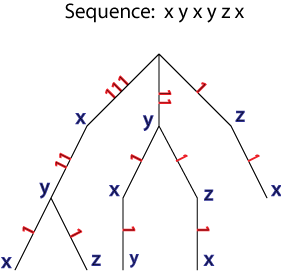

N-gram hierarchy

To obtain n-gram probabilities in a way which exploits the chain

rule, work through the sequence building up an n-gram tree

structure.

When this is done, convert the observed frequencies into the

appropriate conditional probabilities.
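One possible reading of this procedure is sketched below, assuming the tree is stored as nested dictionaries of counts. The names `make_node`, `build_tree` and `conditional_probs`, and the short token sequence, are hypothetical choices for the example.

```python
from collections import defaultdict

def make_node():
    # Each node records how often its n-gram was seen, plus its one-element extensions.
    return {"count": 0, "children": defaultdict(make_node)}

def build_tree(sequence, max_n):
    """Count every n-gram of length 1..max_n in a single pass over the sequence."""
    root = make_node()
    for start in range(len(sequence)):
        node = root
        for element in sequence[start:start + max_n]:
            node = node["children"][element]
            node["count"] += 1
    return root

def conditional_probs(node):
    """Turn the counts of a node's children into p(next element | path to node)."""
    total = sum(child["count"] for child in node["children"].values())
    return {elem: child["count"] / total
            for elem, child in node["children"].items()}

tokens = "a b a b a c".split()  # hypothetical toy sequence
tree = build_tree(tokens, max_n=2)
print(conditional_probs(tree))                   # unigram probabilities
print(conditional_probs(tree["children"]["a"]))  # distribution over what follows `a'
```

Normalising by the sum of the children's counts, rather than by the parent's own count, keeps each distribution summing to 1 even when an n-gram occurs at the very end of the sequence and so has no extension.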

Markov models

A set of n-gram probabilities forms a Markov model of a sequence.

The `order' of the model is fixed by the n-gram size.

- Bigram probabilities: 1st order model.

- Trigram probabilities: 2nd order model.

- Quadrigram probabilities: 3rd order model.

Markov assumption

The Markov assumption, for a model of order n, is that conditioning on

more than the n preceding elements `makes no difference'.

If we have a 1st order Markov model (a model based on bigram

probabilities), then the Markov assumption means that

- p(w4|w3) = p(w4|w3,w2) = p(w4|w3,w2,w1) ...

In other words, effects outside a certain `window' make no

difference.
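Combined with the chain rule, the 1st order assumption lets the probability of a whole sequence be approximated from bigram probabilities alone. The following is a standard consequence, written out here for illustration:

```latex
p(w_1, w_2, \ldots, w_N) \approx p(w_1) \prod_{i=2}^{N} p(w_i \mid w_{i-1})
```

For a model of order n, each factor would instead condition on the n preceding elements.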

Markov sequence models

- Sample sequence: a b b b c a d d a b

- 0th order Markov model.

p(a) = 0.3

p(b) = 0.4

p(c) = 0.1

p(d) = 0.2

- 1st order Markov model, where p(x|y) is the probability that x follows y.

p(a|a) = 0.0 p(c|a) = 0.0

p(a|b) = 0.0 p(c|b) = 0.33

p(a|c) = 1.0 p(c|c) = 0.0

p(a|d) = 0.5 p(c|d) = 0.0

p(b|a) = 0.67 p(d|a) = 0.33

p(b|b) = 0.67 p(d|b) = 0.0

p(b|c) = 0.0 p(d|c) = 0.0

p(b|d) = 0.0 p(d|d) = 0.5
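Models of this kind can be estimated by counting. The sketch below assumes the convention that a 1st order entry gives the probability of an element given the element immediately before it; the variable names `p0` and `p1` are chosen for the example.

```python
from collections import Counter

sequence = "a b b b c a d d a b".split()

# 0th order: the relative frequency of each element.
unigram_counts = Counter(sequence)
p0 = {x: count / len(sequence) for x, count in unigram_counts.items()}

# 1st order: p1[prev][nxt] estimates the probability that nxt follows prev.
pairs = list(zip(sequence, sequence[1:]))
pair_counts = Counter(pairs)
prev_totals = Counter(prev for prev, _ in pairs)
p1 = {}
for (prev, nxt), count in pair_counts.items():
    p1.setdefault(prev, {})[nxt] = count / prev_totals[prev]

print(p0)
for prev in sorted(p1):
    print(prev, p1[prev])
```

Pairs that never occur are simply absent from `p1` and correspond to probability 0. Each denominator counts only the occurrences of an element that have a successor, so the probabilities conditioned on any one element sum to 1.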

Generating a Markov chain

To generate a variation of the sequence (from which n-gram

probabilities have been sampled) we output n-grams

according to their sampled probabilities.

This is the process of generating a `Markov approximation' or

`Markov chain'.

- Initialise the chain to be any n-gram.

- From all n-grams which extend the chain, make a selection in

accordance with sampled probabilities, and append it to the end of the

chain.

- Repeat previous step until sufficient material has been generated.

The order of the chain is the number of previous elements taken

into account in generating each new element.
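The generation steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the lecture's own implementation: the names `build_model` and `generate` are hypothetical, and sampled probabilities are represented implicitly by storing every observed successor in a list and picking uniformly from it.

```python
import random
from collections import defaultdict

def build_model(sequence, order):
    """Map each run of `order` elements to the list of elements seen after it."""
    model = defaultdict(list)
    for i in range(len(sequence) - order):
        context = tuple(sequence[i:i + order])
        model[context].append(sequence[i + order])
    return model

def generate(model, length, rng=random):
    """Grow a chain one element at a time, sampling by observed frequency."""
    chain = list(rng.choice(list(model)))  # initialise with any observed context
    order = len(chain)
    while len(chain) < length:
        context = tuple(chain[len(chain) - order:])
        followers = model.get(context)
        if not followers:  # dead end: this context was never followed by anything
            break
        # Successors that were seen more often appear more often in the list.
        chain.append(rng.choice(followers))
    return chain

sequence = "a b b b c a d d a b".split()
model = build_model(sequence, order=1)
print(" ".join(generate(model, length=20, rng=random.Random(0))))
```

Passing a seeded `random.Random` instance makes a run repeatable. Raising `order` makes the output follow the source more closely, at the cost of more dead ends on short sequences.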

Markov approximations from Shakespeare

Samples generated from the Complete Works of Shakespeare (939,067

words)

i is that othello with me and a is had no of of have a a the

my you i have and make and he sir, and thy of my so me and go

the of a an and the and him my to for to the first on in

i know my lords at this is my good angels sing and so is my

lord of his head of all the purpose to your grace! by thy

sweet self too late to think you shall i beseech you, sir, i

see you shall we shall be this to my

i pray thee, when thou hadst struck so to me as well as i do

not say there's grain of it shall be made a world of tyre act

i scene ii the palace. [enter a servant] servant o my most of

all things are like to see a mess of such a great deal with my

lord, you well, my lord. king henry vi the bird the lie, and

lie open to give her to the duke of all the duke is like a man

of the house of lancaster; and i have of it. pistol 'tis

'semper

3rd-order approximation

i would speak with those that have a sword, and so, i pray to

thee, thou shalt be my lord of westmoreland, and attendants]

king edward iv now let the general wrong of rome-- as fire

drives out fire, so noble and so noble and so am i for i have

heard him say, brutus i do not what 'twas to be revenged on

itself. cleopatra so am i for measure act i pray thee, breathe

my soul into the chantry by: and still as well as i come to

speak truest not truer office of mine at once. no, my good

lord; and in this is the man is the lord polonius my lord, i

am a room in the benefit of his power unto octavia. cleopatra

o, that which i would i might never in my life before this

ancient to the general. second senator howsoever

Markov approximations of Beatles' lyrics

you the to you don't my i a of could and i that to you the

should me come you i you i the been to you the she and to you

i the you it but you it you i she's the i the i i you please

my

you can see the girl when you can show but it's getting so

many years yes, wait till tomorrow way get back in my apart

but i know we will love you know my life i've never be mine, i

want that's why i know i know i held each

you i want me to dance with you and i feel as though you ought

to do what he left it won't be my baby, now went wrong i've

got a boat on me and so my name you know my baby everybody's

trying to be a boat on the hill sees the sheik of love chains

of love. come and you know my name you know my name well don't

get me mine

3rd-order

you want to dance with me i'm in love with another oh, when i

kiss her majesty's a pretty nice girl but i'm miles above you

tell me, i'm so alone don't bother me i'm a loser i'm a loser

and i'm not a second time that was so long bye, bye, bye. lady

madonna children at your man i wanna be your man i wanna be

let it be, you know she thinks of him steal your heart away i

got nothing to say but it's okay when i'm home we're on our

way back home we're going home we're on our love to fast but

i'm miles away and after dark people think for yourself 'cause

it's going fast but i'm miles and my feet are hurting all the

lonely people where they all the lonely people where they all

my heart love i'll give you


Using Markov chains to generate music

Markov chaining is particularly useful for generative music and is very

widely used.

In an early example, Harry F. Olson at RCA Laboratories used Markov chains

in the 1950s to analyse the music of American composer Stephen

Foster, and generate scores based on the analyses of 11 of Foster's

songs.

Lejaren Hiller used the ILLIAC computer at the University of Illinois in 1955

to generate the Illiac Suite (the first genuine case of music GC, according to

Geraint Wiggins). The work combined Markov chaining with the application of

rules of 16th-century counterpoint. There are many good websites on this,

including

http://www.music.psu.edu/Faculty%20Pages/Ballora/INART55/illiac_suite.html

and http://www.lim.dico.unimi.it/eventi/ctama/baggi.htm

Lejaren Hiller and Robert Baker also worked with Markov processes to

produce their `Computer Cantata' in 1963.

Summary

- Calculating n-gram probabilities (the chain rule)

- Generating Markov approximations (chains)


Exercises

- Generate a 1st order Markov model for the sequence: `x z z y x x z y'

- Use the 1st order model to generate a new sequence that is

at least twice the length of the original.

- Specify a sequence whose 1st order Markov model might yield

the chain `a b b a'. The sequence you specify must be

something other than `a b b a'.

- The following text is a word-level Markov text: guess its origin and

order. `Nevertheless the centurion saw what was I ever wont to haunt.

Now the body of his handmaiden: for, behold, your sheaves stood round

about.'

- Let's say we use the chain rule to discover the 3rd-order n-gram

probabilities for a certain text. If the text is 1000 words long and

contains 50 different words, how many multiplications should we expect

to do and why?

- With what probability does the Java random number generator produce a

value in the range 0.4-0.6?

- What will be the effect if an ordinary random-number generator (i.e.,

one which generates all values with equal probability) is used in the

Meservy and Fadel method of text-generation?

- What types of music do you think will be most easily generated

using Markov-chain methods?

- To improve the syntactic coherence of text generated from a

Markov model we should use higher-order statistics. Will this also

help to improve semantic coherence?

Page created on: Wed Feb 3 10:52:05 GMT 2010

Feedback to Chris Thornton